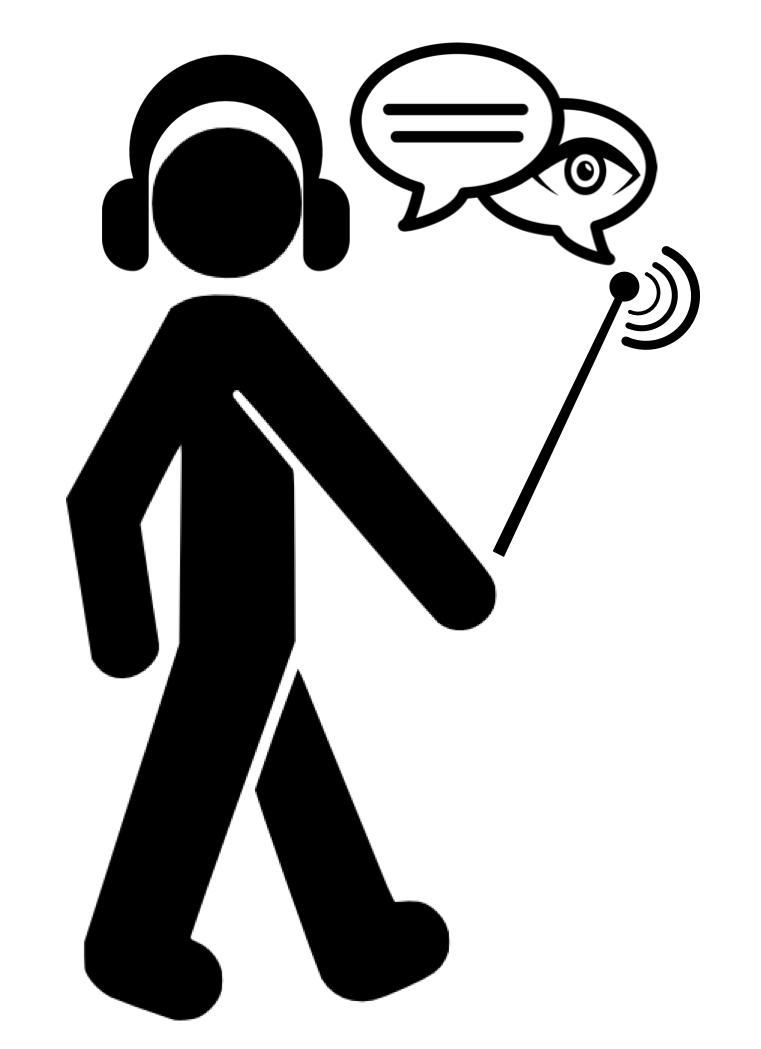

The advent and availability of affordable 3D depth sensors have created new opportunities for 3D scene understanding and navigation for the visually impaired. Examples of such sensors include RealSense, PrimeSense, and other time-of-flight or structured-light sensors priced at around $150. Such 3D sensing devices have already been integrated into systems such as Microsoft's HoloLens, the Magic Leap headset, and other mixed reality devices available on the market today. In this project, we combine low-cost 3D sensing devices with smartphones to develop an app that transcribes 3D scenes for the visually impaired in the same way that captions describe a 2D image. We envision the app being used by the visually impaired in two ways. First, it can be used for 3D navigation in the real world to avoid obstacles, thus obviating the need for a cane. Second, it can be used to understand the scene the user is in by describing the objects and humans in the scene, the relative relationships between them, and the actions and activities they are engaged in. This description would then be communicated to the user primarily through audio. The technical milestones for building this system can be summarized as follows: (a) integrate and interface the 3D sensor with the mobile app; (b) synchronize the 3D data captured by the depth sensor with the RGB data captured by the phone camera; (c) generate a 3D model of the world in the form of a colored point cloud or colored mesh; (d) semantically segment the point cloud and mesh in order to label objects in the 3D scene; (e) translate the semantic parsing into sentences describing the scene, to be communicated to the user via audio; (f) build a 3D library of spaces frequently visited by the visually impaired user so that, when the person revisits them, s/he can be accurately re-localized within the scene. Of these steps, we have so far completed steps (a) and (b) and are halfway done with step (c).
The bulk of our effort during this project will go into steps (d), (e), and (f), which rely heavily on AI and machine learning methodologies. For step (d), there is an ongoing project in my lab at UC Berkeley on 3D semantic segmentation using RGB and depth data for autonomous driving; we will leverage this expertise to develop 3D indoor scene segmentation methods. For step (e), we will follow existing approaches in image captioning to arrive at a rich 3D description of a scene, similar to the way movies are transcribed for the visually impaired. For step (f), we will leverage our work over the past decade on image-based localization and loop closure detection in SLAM algorithms.
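
Step (c), generating a colored point cloud from synchronized depth and RGB data, can be illustrated with a minimal sketch. It assumes a standard pinhole camera model, a depth image already registered pixel-for-pixel to the RGB image (the outcome of step (b)), and depth values in metres with 0 marking missing measurements. The function name and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical placeholders, not values from our sensor; this is not the project's actual implementation.

```python
from typing import List, Tuple

# One colored point: camera-frame x, y, z (metres) followed by r, g, b.
Point = Tuple[float, float, float, int, int, int]


def depth_to_colored_points(
    depth: List[List[float]],
    rgb: List[List[Tuple[int, int, int]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a registered depth image into colored 3D points.

    Assumes a pinhole camera: pixel (u, v) with depth z maps to
    x = (u - cx) * z / fx and y = (v - cy) * z / fy.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0.0:  # assumed convention: 0 means no depth reading
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            r, g, b = rgb[v][u]
            points.append((x, y, z, r, g, b))
    return points


if __name__ == "__main__":
    # Tiny 2x2 example with made-up intrinsics.
    depth = [[1.0, 0.0], [2.0, 2.0]]
    rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (9, 9, 9)]]
    for p in depth_to_colored_points(depth, rgb, 1.0, 1.0, 0.0, 0.0):
        print(p)
```

Points from successive frames would still need to be transformed into a common world frame using the estimated camera pose before being merged into a single model; that step is omitted here.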

We plan to build a device to be carried by the visually impaired to help them transcribe indoor spaces in 3D. This is accomplished by depth and RGB sensors integrated into a smartphone. We leverage AI tools at multiple levels in our project. First, we apply deep learning algorithms that take depth and RGB data as input to semantically segment each captured RGB-D frame. Second, we fuse the semantic segmentation results across time as new frames become available. This allows us to incrementally enhance and update the model of the scene as the user moves the camera. In other words, the semantic predictions from multiple viewpoints are probabilistically fused into a dense, semantically annotated map. Third, we develop "3D to language" methods to convert the semantic segmentation of the scene into sentences to be communicated to the user via audio. The first and second problems require extensive use of machine learning techniques. We use a convolutional neural network to semantically segment each RGB-D frame. In particular, we plan to use a VGG 16-layer network with the addition of max unpooling and deconvolutional layers, trained to output a dense pixel-wise semantic probability map. The CNN takes four input channels: three for RGB and one for depth. We will train on a variety of 3D indoor datasets such as S3DIS, NYU, and SUNCG, but test in real-life environments such as homes, offices, and gyms. Our goal is to run semantic segmentation at 25 frames per second or higher and to provide verbal cues to the visually impaired person that describe (a) objects in the scene; (b) the relative geometry of the objects with respect to each other and to the user; (c) humans in the scene; and (d) the activities humans are engaged in (e.g., walking, running, eating), obtained using activity recognition. We "sync" the device carried by the user on a daily basis so that the 3D scenes of the spaces s/he has visited during the day are uploaded to cloud storage.
Over time, a library of all the places the user has visited will accumulate in the cloud. This repository can be used in two ways: to update the existing models in the database upon subsequent revisits, and to leverage reconstructions of a given space from prior visits in order to speed up real-time scene construction of the same space during the current visit. This is a plausible approach since most people's daily lives center around a finite number of indoor locations. We plan to use machine learning methods to detect "revisits" during the above processes. This step of the project is quite challenging and as such is a stretch goal. We will use PyTorch within Azure Machine Learning Service, and we will use its hyperparameter tuning services to improve the accuracy of our semantic segmentation. We hope to use the GPU capabilities of Azure to handle the large amount of training our system requires.
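
The probabilistic fusion of per-frame semantic predictions into a single annotated map, described above, can be illustrated with a minimal sketch. It assumes one common formulation, a recursive Bayesian update in which each map element keeps a class distribution that is multiplied by every new per-frame prediction and then renormalized, treating frames as conditionally independent. The `SemanticMap` class, the voxel-index keys, and the `eps` floor are hypothetical choices made for illustration; the fusion rule we eventually adopt may differ.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int, int]  # hypothetical voxel index in the map


def fuse(prior: List[float], frame_probs: List[float],
         eps: float = 1e-6) -> List[float]:
    """Recursive Bayesian update: elementwise product, then renormalize.

    Frame probabilities are floored at eps so that one overconfident
    frame cannot permanently zero out a class.
    """
    product = [p * max(q, eps) for p, q in zip(prior, frame_probs)]
    total = sum(product)
    return [x / total for x in product]


class SemanticMap:
    """Keeps one class distribution per map cell (illustrative only)."""

    def __init__(self, num_classes: int) -> None:
        self.num_classes = num_classes
        self.cells: Dict[Cell, List[float]] = {}

    def update(self, cell: Cell, frame_probs: List[float]) -> None:
        # Cells not seen before start from a uniform prior.
        uniform = [1.0 / self.num_classes] * self.num_classes
        prior = self.cells.get(cell, uniform)
        self.cells[cell] = fuse(prior, frame_probs)

    def label(self, cell: Cell) -> int:
        """Return the index of the most probable class for a cell."""
        probs = self.cells[cell]
        return max(range(len(probs)), key=probs.__getitem__)


if __name__ == "__main__":
    semantic_map = SemanticMap(num_classes=3)
    # Two made-up network outputs for the same cell, seen from two views.
    semantic_map.update((0, 0, 0), [0.6, 0.3, 0.1])
    semantic_map.update((0, 0, 0), [0.5, 0.4, 0.1])
    print(semantic_map.cells[(0, 0, 0)], semantic_map.label((0, 0, 0)))
```

In this scheme, agreement between viewpoints sharpens the distribution for a cell, while a single conflicting frame only partially shifts it, which is the behavior we want when the camera is moved through the scene by the user.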