Automated, Scalable, Airborne Only, 3D Modeling

Min Ding, Kristian Lyngbaek and Avideh Zakhor

Textured 3D city models are needed in many applications such as city planning, 3D mapping, and photo-realistic fly-throughs and drive-throughs of urban environments. While combined ground/aerial modeling results in photorealistic models suitable for both virtual walk-throughs and drive-throughs, generating such models is extremely time consuming because every street needs to be driven through and mapped properly. In contrast, airborne-only modeling can potentially be done extremely efficiently, since flying over a region is much faster than driving within it. As such, airborne-only modeling could result in fast, scalable 3D mapping of major urban areas around the globe.

3D model geometries are typically generated from stereo aerial photographs or range sensors such as lidar. Fast, automated mapping of detailed aerial textures onto the 3D geometry models is a challenging problem and the subject of this project. Traditionally, this has been done by a human operator manually establishing correspondences between landmark features in the 3D model and the 2D imagery. This approach is extremely time-consuming and does not scale to large regions. Früh et al. [1] developed an approach in 2004 which starts with a coarse GPS/INS readout and refines it via an exhaustive search. This technique would take approximately 20 hours per image on today's computers.

In this project, we have developed a scheme which can automatically register aerial imagery onto 3D geometry models in a matter of minutes instead of hours. We start with a coarse GPS/INS readout and then refine the pose by applying a series of algorithms: (a) vanishing point detection; (b) 2D corner detection and correspondence between the 3D model and the 2D imagery; (c) a Hough transform to prune possible matches; (d) a generalized RANSAC algorithm to find inlier matches; and (e) Lowe's algorithm to compute the 3D camera pose. There are a number of innovations in this project. The first is a new vanishing point detection algorithm for aerial images of complex urban scenes. The detected vanishing points provide a coarse camera pose estimate and extract inherently 3D information from 2D imagery. The second is a feature point, the 2D corner, which is detected in images based on the 3D information captured by the vanishing points. 2D corners are used as point correspondences for camera pose refinement. The last innovation is the overall system design and the flow of algorithmic steps required to solve this problem. By taking advantage of the parallelism and orthogonality inherently present in man-made structures, our system is able to apply well-justified algorithms to provide a fast and truly automated camera registration solution for texture mapping. Our proposed system achieves 91% accuracy in recovering camera pose for 90 oblique aerial images over the downtown
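To make step (a) concrete, the sketch below shows the textbook geometric idea behind a vanishing point: lines that are parallel in 3D project to image lines that meet at a common point. It is not the detection algorithm developed in this project, which handles many noisy segments in complex urban scenes. The function names (`cross`, `line_through`, `vanishing_point`) and the `eps` tolerance are illustrative assumptions.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def line_through(p, q):
    """Homogeneous line through two image points (x, y)."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def vanishing_point(seg_a, seg_b, eps=1e-9):
    """Intersect the lines supporting two image segments.

    Each segment is a pair of (x, y) endpoints. Returns the intersection
    as (x, y), or None when the image lines are parallel, in which case
    the vanishing point lies at infinity.
    """
    v = cross(line_through(*seg_a), line_through(*seg_b))
    if abs(v[2]) < eps:
        return None
    return (v[0] / v[2], v[1] / v[2])


# Two hypothetical building edges that converge in the image.
edge_1 = ((0.0, 0.0), (2.0, 1.0))
edge_2 = ((0.0, 10.0), (2.0, 9.0))
print(vanishing_point(edge_1, edge_2))
```

With real imagery, a detector would have to estimate each vanishing point from many detected segments and reject outliers, rather than intersecting a single pair as done here.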

More details on this project can be found in this paper.

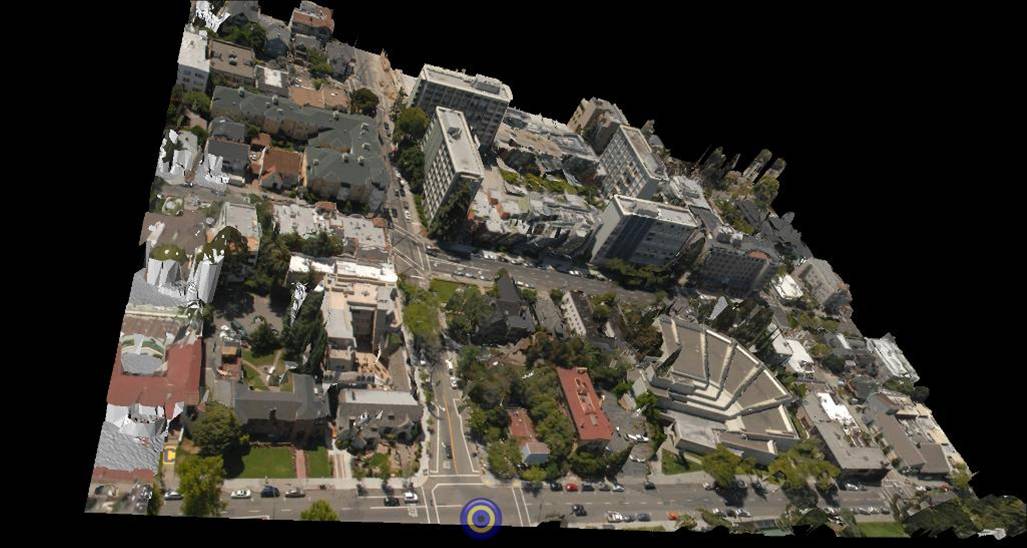

Figure 1: An example of a residential area in downtown

[1] C. Früh, R. Sammon, and A. Zakhor, "Automated Texture Mapping of 3D City Models With Oblique Aerial Imagery," 2nd International Symposium on 3D Data Processing, Visualization, and Transmission, Thessaloniki, Greece, September 2004, pp. 396-403.

Model Downloads

Below are several models of UC Berkeley campus and residential areas at

1. Automatic Models: The following models were generated from (a) airborne laser scans, used to build the 3D geometry model, and (b) oblique aerial imagery, which has been automatically texture mapped onto that geometry using the techniques described above. These models are available in both VRML (.wrl) and .ive formats. The .ive files are post-processed beyond the .wrl models:

- Campus 3 (.wrl) (18 MB) Campus 3 (.ive) (54 MB)

- Campus 4 (.wrl) (18 MB) Campus 4 (.ive) (57 MB)

- Campus 5 (.wrl) (23 MB) Campus 5 (.ive) (67 MB)

- Campus 6 (.wrl) (16 MB) Campus 6 (.ive) (57 MB)

- Campus 7 (.wrl) (30 MB) Campus 7 (.ive) (91 MB)

- Campus 8 (.wrl) (21 MB) Campus 8 (.ive) (50 MB)

- Residential 3 (.wrl) (14 MB) Residential 3 (.ive) (35 MB)

- Residential 5 (.wrl) (11 MB) Residential 5 (.ive) (73 MB)

- Residential 7 (.wrl) (19 MB) Residential 7 (.ive) (47 MB)

- Downtown 1 (.wrl) (20 MB) Downtown 1 (.ive) (56 MB)

- Downtown 2 (.wrl) (24 MB) Downtown 2 (.ive) (58 MB)

- Downtown 3 (.wrl) (12 MB) Downtown 3 (.ive) (34 MB)

- Downtown 4 (.wrl) (39 MB) Downtown 4 (.ive) (68 MB)

- Downtown 5 (.wrl) (33 MB) Downtown 5 (.ive) (42 MB)

- Downtown 6 (.wrl) (30 MB) Downtown 6 (.ive) (44 MB)

The above models have been merged into the following three large .ive files to provide models of campus, residential and downtown:

- Combined 3D Campus (.ive) (486 MB)

- Combined Residential (.ive) (205 MB)

- Combined Downtown (.ive) (354 MB)

The above three .ive files for campus, residential and downtown have been merged into one large .ive file for the entire region

1. Manual Models: The following VRML models were generated from (a) airborne laser scans, used to build the 3D geometry model, and (b) oblique aerial pictures, which have been manually texture mapped onto the 3D geometry model. These models are in VRML format.

- Campus 2 (17 MB)

- Campus 3 (18 MB)

- Campus 4 (17 MB)

- Campus 5 (15 MB)

- Campus 6 (20 MB)

- Campus 7 (17 MB)

- Campus 8 (19 MB)

- Residential 1 (12 MB)

- Residential 3 (17 MB)

- Residential 5 (16 MB)

- Residential 7 (19 MB)

The above models have been merged into the following large .ive files to provide models of the campus and surrounding residential areas:

- Combined Campus Model (348 MB)

- Combined Residential Model (161 MB)

- Combined Residential Campus Model (633 MB compressed)

2. The VRML model viewing software can be downloaded from BS Contact VRML/X3D 7.0.

3. To view the above combined models, you can download the IVE model viewing software: the Open Scene Graph open source package developed by the OSG community. Alternatively, you may download the precompiled version of Open Scene Graph 2.2.0: x86, x64 for 32-bit and 64-bit machines. It is advisable to view larger models on 64-bit machines.

4. Geographic Layout of the above models is shown here: C stands for Campus, D for Downtown, and R for Residential.

| | | |
|---|---|---|
| C1 X: 1199-1550 Y: 5124-5400 | C2 X: 1549-1900 Y: 5124-5400 | R1 X: 1899-2200 Y: 5124-5400 |
| C3 X: 1199-1550 Y: 4849-5125 | C4 X: 1549-1900 Y: 4849-5125 | R3 X: 1899-2200 Y: 4849-5125 |
| C5 X: 1199-1550 Y: 4574-4850 | C6 X: 1549-1900 Y: 4574-4850 | R5 X: 1899-2200 Y: 4574-4850 |
| C7 X: 1199-1550 Y: 4299-4575 | C8 X: 1549-1900 Y: 4299-4575 | R7 X: 1899-2200 Y: 4299-4575 |
| D1 X: 1199-1550 Y: 4024-4300 | D2 X: 1549-1900 Y: 4024-4300 | D3 X: 1899-2200 Y: 4024-4300 |
| D4 X: 1199-1550 Y: 3749-4025 | D5 X: 1549-1900 Y: 3749-4025 | D6 X: 1899-2200 Y: 3749-4025 |
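The layout listed above is a 3-by-6 grid in which neighboring tiles overlap by one unit along each shared edge. As a minimal sketch of how the grid could be used to find which model file covers a given location, the lookup below copies the X and Y ranges from the layout. The function name `tiles_containing` is hypothetical, and the coordinate units are an open assumption because the page does not state them.

```python
# Tile bounds copied from the layout above; units are not stated in the source.
X_RANGES = [(1199, 1550), (1549, 1900), (1899, 2200)]  # columns, left to right
Y_RANGES = [(5124, 5400), (4849, 5125), (4574, 4850),
            (4299, 4575), (4024, 4300), (3749, 4025)]  # rows, top to bottom
NAMES = [["C1", "C2", "R1"],
         ["C3", "C4", "R3"],
         ["C5", "C6", "R5"],
         ["C7", "C8", "R7"],
         ["D1", "D2", "D3"],
         ["D4", "D5", "D6"]]


def tiles_containing(x, y):
    """Return the names of all tiles whose bounds include (x, y).

    Points in the one-unit overlap between neighbors match several tiles,
    so the result is a list rather than a single name.
    """
    hits = []
    for row, (y_min, y_max) in enumerate(Y_RANGES):
        for col, (x_min, x_max) in enumerate(X_RANGES):
            if x_min <= x <= x_max and y_min <= y <= y_max:
                hits.append(NAMES[row][col])
    return hits


print(tiles_containing(1300, 5200))      # a point inside a single campus tile
print(tiles_containing(1549.5, 5124.5))  # a point in the overlap of four tiles
```

Returning a list keeps the overlap explicit, so a caller can decide which of the overlapping models to load.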