Automated 3D Modeling of Building Interiors

Three-dimensional models of objects, sites, buildings, structures, and urban environments made of geometry and texture are important in a variety of civilian and military applications such as training and simulation for disaster management, counteracting terrorism, virtual heritage conservation, virtual museums, historical sites documentation, mapping of hazardous sites and underground tunnels, and modeling of industrial and power plants for design verification and manipulation.

While object modeling has received a great deal of attention in recent years, 3D site modeling, particularly for indoor environments, poses significant challenges. The main objective of this proposal is to design, analyze, and develop the architecture and algorithms, as well as the associated statistical and mathematical framework, for a human-operated, portable 3D indoor/outdoor modeling system capable of generating photorealistic renderings of the internal structure of multi-story buildings as well as the external structure of a collection of buildings on a campus.

Key technical challenges include system architecture; sensor choice, calibration, and error characterization; local and global localization algorithms; sensor integration, registration, and fusion; and complete and accurate coverage of all details. Using a human-operated backpack system equipped with 2D laser scanners, cameras, and inertial measurement units, we develop four scan-matching-based algorithms to localize the backpack and compare their performance and tradeoffs. In addition, we show how the scan-matching-based localization algorithms, combined with an extra stage of image-based alignment, can be used to generate textured, photorealistic 3D models. We present results for two datasets of a 30-meter-long indoor hallway.
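To make the idea of scan matching concrete, here is a minimal sketch of generic point-to-point ICP (iterative closest point) between two 2D laser scans. It is not one of the four algorithms developed in this project; the function names `best_rigid_transform` and `icp_2d`, the brute-force nearest-neighbor search, and the fixed iteration count are all assumptions chosen for illustration.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t mapping src onto dst (both N x 2)."""
    src_mean = src.mean(axis=0)
    dst_mean = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_mean).T @ (dst - dst_mean)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # guard against a reflection solution
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_mean - R @ src_mean
    return R, t


def icp_2d(src, dst, iterations=30):
    """Estimate the rigid motion (R, t) that aligns scan `src` to scan `dst`."""
    R_total = np.eye(2)
    t_total = np.zeros(2)
    current = src.copy()
    for _ in range(iterations):
        # Brute-force nearest neighbor in dst for every point of the moving scan.
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # Compose the incremental motion with the running estimate.
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total
```

Chaining the motion estimated between consecutive scans gives a dead-reckoned trajectory. In a long, featureless hallway, plain ICP of this kind is poorly constrained along the corridor axis, which is one reason a practical system would combine it with other sensors.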

Full 3D Models Available for Download

Download 3D models for both datasets 1 and 2 here. Note that the zip file is approximately 280 MB.

The zip file includes two 3D models, one for dataset 1 and one for dataset 2. Both datasets were acquired on July 1, 2009, on the fourth floor of Cory Hall at UC Berkeley. The two datasets were acquired by two different human operators with two different walking gaits. Each model is a textured, triangular mesh saved as a .ive file, a native binary format of OpenSceneGraph that can be opened with its osgviewer program. To view these models, first download and install OpenSceneGraph. Then, in Windows, open a command prompt, cd to the directory in which the .ive files are stored, and enter a command of the form "osgviewer cory4_20090701_setX_blended.ive".
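The viewing step can also be scripted. The following is a small sketch that builds and runs the osgviewer command from Python. It assumes that the "X" in the file name pattern stands for the dataset number (1 or 2) and that osgviewer is on the PATH; the helper names `viewer_command` and `view_model` are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path


def viewer_command(model_dir, dataset):
    """Build the osgviewer argument list for one dataset's blended model."""
    # Assumption: "X" in cory4_20090701_setX_blended.ive is the dataset number.
    model = Path(model_dir) / f"cory4_20090701_set{dataset}_blended.ive"
    return ["osgviewer", str(model)]


def view_model(model_dir, dataset):
    """Launch osgviewer if it is installed and the model file exists."""
    cmd = viewer_command(model_dir, dataset)
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError("osgviewer not found; install OpenSceneGraph first")
    if not Path(cmd[1]).is_file():
        raise FileNotFoundError(f"model file not found: {cmd[1]}")
    # Blocks until the viewer window is closed.
    subprocess.run(cmd, check=True)
```

For example, `view_model(".", 1)` would open the dataset 1 model from the current directory, provided the file name follows the assumed pattern.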

Screenshots of 3D Models

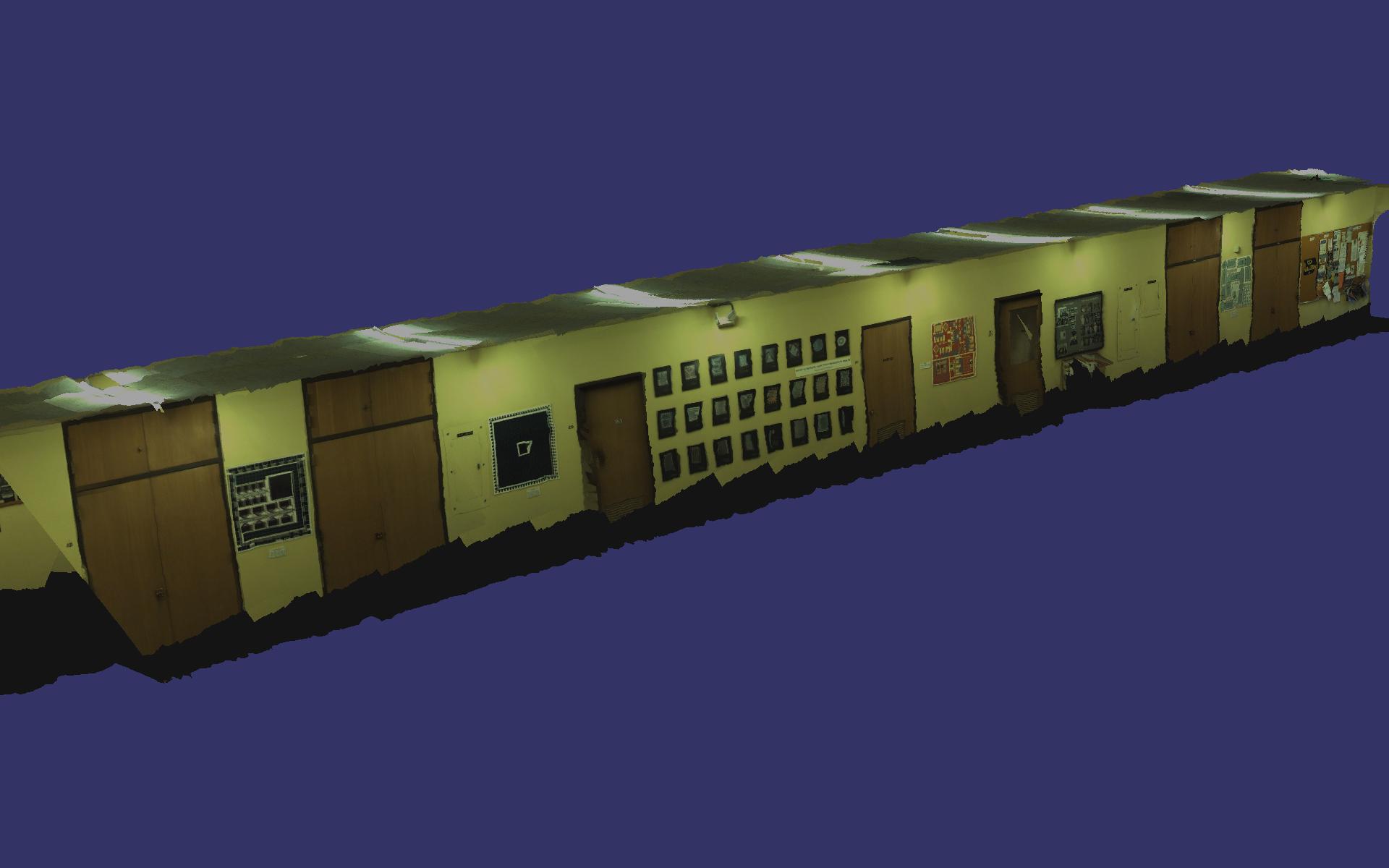

Here are screenshots of the blended 3D model for dataset 1.

References

G. Chen, J. Kua, S. Shum, N. Naikal, M. Carlberg, and A. Zakhor. "Indoor Localization Algorithms for a Human-Operated Backpack System," submitted to 3D Data Processing, Visualization, and Transmission 2010, Paris, France, May 2010.

N. Naikal, J. Kua, G. Chen, and A. Zakhor. "Image Augmented Laser Scan Matching for Indoor Dead Reckoning," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), St. Louis, MO, October 2009.